Dr Tina Potter

Department of Physics, University of Cambridge

https://www.phy.cam.ac.uk/directory/dr-tina-potter

https://www.hep.phy.cam.ac.uk/~chpotter/particleandnuclearphysics/mainpage.html

Astronomical observations tell us that dark matter makes up 27% of our Universe and experiences the gravitational force, yet we still know very little beyond this. The Large Hadron Collider (LHC) at CERN continues to search for new, exotic particles that could explain Dark Matter. Dr Tina Potter introduced the LHC and the largest of the four main detectors, ATLAS. She showed how and why scientists search for a rich array of new particles predicted by Supersymmetry and the latest results from these searches. As the LHC programme moves into its next stage, she explained what further secrets of the Universe could be uncovered.

Research Interests

Dr Potter’s personal research interests lie in the discovery of new physics Beyond the Standard Model. In particular, she wants to know what makes up 85% of the matter in our universe — the as-yet unexplained Dark Matter.

She is searching for signs of new particles that may briefly form in the high energy proton-proton collisions at the Large Hadron Collider. The lack of a discovery to date tells us that any new physics may not be easy to find; whether Dark Matter itself, or other new particles which decay to Dark Matter, the possible signatures of new particle production can be complex and difficult to pick out of the abundant Standard Model processes. Her research focuses on the design of novel and sensitive searches for new physics, such as Supersymmetry, using the ATLAS detector. Supersymmetry offers a potential solution by introducing many new particles, the lightest of which is an excellent dark matter candidate. She pushes the sensitivity of our searches for Supersymmetry and new physics in general, with the ultimate goal of a discovery of a new particle that will help us understand the composition of our universe.

Her research also involves understanding the potential for the discovery or characterisation of new physics scenarios at future colliders.

Below is the link to the video of the lecture:

The following are notes from the online lecture. Even though I could stop the video and go back over things, there are likely to be mistakes because I haven’t heard things correctly or haven’t understood them. I hope Dr Potter and my readers will forgive any mistakes and let me know what I got wrong.

Biography

Dr. Potter was raised in Croydon.

Between 2001 and 2009 she completed a physics degree and PhD at Royal Holloway, University of London.

https://www.royalholloway.ac.uk/

https://en.wikipedia.org/wiki/Royal_Holloway,_University_of_London

Royal Holloway, University of London (RHUL), formally incorporated as Royal Holloway and Bedford New College, is a public research university and a constituent college of the federal University of London. It has six schools, 21 academic departments and approximately 10,500 undergraduate and postgraduate students from over 100 countries. The campus is located west of Egham, Surrey, 31 km from central London.

Between 2009 and 2015 she held a post-doc position at the University of Sussex (more importantly she adopted two dogs during this period, oh and she had a baby).

https://en.wikipedia.org/wiki/University_of_Sussex

The University of Sussex is a public research university located in Falmer, Sussex, England. Its large campus site is surrounded by the South Downs National Park and is around 5.5 kilometres from central Brighton. The University received its Royal Charter in August 1959, the first of the plate glass university generation, and was a founding member of the 1994 Group of research-intensive universities.

Between 2015 and 2018 she had a temporary Early Careers Lectureship at the University of Cambridge (during this period she had a second baby).

In 2018 she gained a permanent lectureship at the University of Cambridge.

https://en.wikipedia.org/wiki/University_of_Cambridge

The University of Cambridge (legally, The Chancellor, Masters, and Scholars of the University of Cambridge) is a collegiate research university in Cambridge, United Kingdom. Founded in 1209 and granted a royal charter by King Henry III in 1231, Cambridge is the second-oldest university in the English-speaking world and the world’s fourth-oldest surviving university. The university grew out of an association of scholars who left the University of Oxford after a dispute with the townspeople.

https://en.wikipedia.org/wiki/Cavendish_Laboratory

The Cavendish Laboratory is the Department of Physics at the University of Cambridge, and is part of the School of Physical Sciences. The laboratory was opened in 1874 on the New Museums Site as a laboratory for experimental physics and is named after the British chemist and physicist Henry Cavendish. The laboratory has had a huge influence on research in the disciplines of physics and biology.

The laboratory moved to its present site in West Cambridge in 1974.

As of 2019, 30 Cavendish researchers have won Nobel Prizes. Notable discoveries to have occurred at the Cavendish Laboratory include the discovery of the electron, neutron, and structure of DNA.

What is high energy physics?

It is the study of the fundamental particles and forces: what they are and how they behave. For instance, millions of neutrinos are passing through us all the time, and most of the ordinary (visible) matter in the Universe is made up of up and down quarks.

Fundamental means that the particle can’t be broken down into anything smaller.

https://www.newworldencyclopedia.org/entry/Fermion

There are 12 fundamental fermions, 3 fundamental forces and one particle responsible for mass generation. Together they make up the Standard Model.

In the Standard Model the 12 fundamental fermions are usually considered the building blocks of matter.

The 3 fundamental force particles and the Higgs particle (responsible for mass generation) are bosons.

Fermions are subdivided into quarks and leptons. Quarks are fermions that couple with a class of bosons known as gluons to form composite particles such as protons and neutrons. Leptons are those fermions that do not undergo coupling with gluons. Electrons are a well-known example of leptons.

Fermions come in pairs, and in three “generations.” Everyday matter is composed of the first generation of fermions: two leptons, the electron and electron-neutrino; and two quarks, called Up and Down.

In theoretical terms, one major difference between fermions and bosons is related to a property known as “spin.” Fermions have odd half-integer spin (1/2, 3/2, 5/2, and so forth), whereas bosons have integer spin (0, 1, 2, and so forth). (Here, “spin” refers to the angular momentum quantum number.) Fermions obey Fermi-Dirac statistics, which means that when one swaps two fermions, the wavefunction of the system changes sign.

Given that each fermion has half-integer spin, when an observer circles a fermion (or when the fermion rotates 360° about its axis), the wavefunction of the fermion changes sign. A related phenomenon is called an antisymmetric wavefunction behaviour of a fermion.

As particles with half-integer spin, fermions obey the Pauli exclusion principle: no two fermions can exist in the same quantum state at the same time. Thus, for more than one fermion to occupy the same place in space, certain properties (such as spin) of each fermion in the group must be different from the rest.

https://en.wikipedia.org/wiki/Standard_Model

The Standard Model of particle physics is the theory describing three of the four known fundamental forces (the electromagnetic, weak, and strong interactions, and not including the gravitational force) in the universe, as well as classifying all known elementary particles.

First generation quarks are the up and the down, and they make up most of the ordinary matter in the Universe. The neutron is made up of two down quarks and one up quark, and the proton is made up of two up quarks and one down quark. The quarks are held together by the strong force, which is carried by gluons.
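The quark content quoted above can be checked against the electric charges of the proton and neutron. This is only a small sketch: the quark charges of +2/3 and -1/3 (in units of the proton charge) are standard values but were not given in the lecture notes, and the names `QUARK_CHARGE` and `hadron_charge` are my own.

```python
from fractions import Fraction

# Electric charges of the first-generation quarks, in units of the proton charge.
# Assumption: standard textbook values, not quoted in the lecture notes.
QUARK_CHARGE = {
    "u": Fraction(2, 3),
    "d": Fraction(-1, 3),
}


def hadron_charge(quarks):
    """Add up the charges of the quarks in a hadron, e.g. 'uud' for the proton."""
    return sum((QUARK_CHARGE[q] for q in quarks), Fraction(0))


proton_charge = hadron_charge("uud")   # two up quarks and one down quark
neutron_charge = hadron_charge("udd")  # two down quarks and one up quark

print("proton charge:", proton_charge)
print("neutron charge:", neutron_charge)
```

Using exact fractions avoids rounding, so the proton comes out with exactly one unit of charge and the neutron with exactly zero.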

https://en.wikipedia.org/wiki/Neutron

https://en.wikipedia.org/wiki/Proton

The quark structure of the neutron (left) and proton (right). The colour assignment of individual quarks is arbitrary, but all three colours must be present. Forces between quarks are mediated by gluons.

Second generation quarks are the charm and strange.

Third generation quarks are the top and bottom.

First generation leptons are the electron and electron neutrino.

https://en.wikipedia.org/wiki/Electron

Whereas the neutrons and the protons are found in the nucleus of atoms the electrons are found orbiting the outside of the nucleus.

https://en.wikipedia.org/wiki/Atom

An illustration of the helium atom, depicting the nucleus (pink) and the electron cloud distribution (black). The nucleus (upper right) in helium-4 is in reality spherically symmetric and closely resembles the electron cloud, although for more complicated nuclei this is not always the case. The black bar is one angstrom (10⁻¹⁰ m or 100 pm).

An atom is the smallest unit of ordinary matter that forms a chemical element.

Second generation leptons are the muon and muon neutrino.

Third generation leptons are the tau and tau neutrino.

The three fundamental force particles are the gluon, responsible for the strong force that keeps the quarks together, the photon, responsible for the electromagnetic force, and the W/Z particles, responsible for the weak force.

The particle responsible for mass generation (why sub-atomic particles have mass) is the famous Higgs particle.

https://en.wikipedia.org/wiki/Gluon

https://en.wikipedia.org/wiki/Photon

https://en.wikipedia.org/wiki/W_and_Z_bosons

https://en.wikipedia.org/wiki/Higgs_boson

The Big Bang

https://www.quantumdiaries.org/wp-content/uploads/2013/07/BigBang.jpeg

When particle physicists are looking for the tiniest particle, they are in fact looking back in time, and their research involves trying to recreate the early Universe. Because it uses high energies to reproduce the conditions of the early Universe, high energy physics is a bit like having a time machine.

It is now widely accepted that all matter (dark and visible) started out being uniformly distributed just after the Big Bang. In short, a rapid expansion followed where the Universe cooled down and particles slowed down enough to form nuclei three minutes after the Big Bang. The first atoms appeared 300 000 years later while galaxies formed between a hundred and a thousand million years later.

Dark matter, if it exists, is heavier than regular matter and slowed down earlier. Small quantum fluctuations eventually turned into small lumps of dark matter. These lumps attracted more dark matter under the effect of the gravitational attraction, in a very slow snowball effect. Since dark matter also interacts very weakly, these planted seeds survived well through the stormy moments of the early Universe.

Once matter cooled off as the Universe expanded, it started accumulating on the lumps of dark matter. Hence, dark matter planted the seeds for galaxies. All this could have happened without dark matter, although it would have taken much more time.

https://www.universetoday.com/54756/what-is-the-big-bang-theory/

The Big questions

1) What are dark matter and dark energy?

2) What is responsible for the matter-antimatter asymmetry?

https://en.wikipedia.org/wiki/CP_violation

In particle physics, CP violation is a violation of CP-symmetry (or charge conjugation parity symmetry): the combination of C-symmetry (charge symmetry) and P-symmetry (parity symmetry). CP-symmetry states that the laws of physics should be the same if a particle is interchanged with its antiparticle (C symmetry) while its spatial coordinates are inverted (“mirror” or P symmetry).

It plays an important role both in the attempts of cosmology to explain the dominance of matter over antimatter in the present universe, and in the study of weak interactions in particle physics.

Just after the Big Bang the quantities of matter and antimatter were the same. If nothing had changed, this would still be the case now, so something must have happened, or there must be some difference between the two, for there to be an excess of matter.

There are some sources that could explain the difference.

3) Is there structure in flavour sector?

https://en.wikipedia.org/wiki/Flavour_(particle_physics)

In particle physics, flavour refers to the species of an elementary particle. The Standard Model counts six flavours of quarks and six flavours of leptons.

The flavour sector leads to masses and mixing of quarks and leptons.

https://ncatlab.org/nlab/show/flavour+(particle+physics)

There are 22 free parameters to describe this mixing.

The flavour sector is the most puzzling part of the standard model.

Broadly, the flavour problem is the fact that the nature and principles behind the flavour sector of the standard model are not understood very well. More concretely, the flavour problem in models going beyond the standard model, is that introducing any New Physics while satisfying observational constraints on flavour physics seems to demand a high level of fine-tuning in the flavour sector.

Moreover, all observed CP violation is related to flavour-changing interactions.

Finally, due to confinement, the flavour-changing transitions between quarks are not seen in isolation by collider experiments, but are only seen via the induced decays of the mesons and/or baryons that the quarks are bound in.

All this suggests that the flavour sector is controlled by mechanisms that are not understood or identified yet.

https://ncatlab.org/nlab/show/flavour+anomaly

In the current standard model of particle physics the fundamental particles in the three generations of fermions have identical properties from one generation to the next, except for their mass, a state of affairs referred to as lepton flavour universality (LFU). A possible violation of lepton flavour universality is called a flavour anomaly, which would be a sign of “New Physics” (NP) beyond the standard model.

The presence – or not – of flavour anomalies is part of the general flavour problem of the standard model of particle physics.

4) Why is the Higgs mass so low?

https://en.wikipedia.org/wiki/Higgs_boson

The Higgs boson is an elementary particle in the Standard Model of particle physics, produced by the quantum excitation of the Higgs field, one of the fields in particle physics theory. It is named after physicist Peter Higgs, who in 1964, along with five other scientists, proposed the Higgs mechanism to explain why particles have mass. This mechanism implies the existence of the Higgs boson. The Higgs boson was initially discovered as a new particle in 2012 by the ATLAS and CMS collaborations based on collisions in the LHC at CERN, and the new particle was subsequently confirmed to match the expected properties of a Higgs boson over the following years.

https://en.wikipedia.org/wiki/Hierarchy_problem#The_Higgs_mass

In theoretical physics, the hierarchy problem is the large discrepancy between aspects of the weak force and gravity. There is no scientific consensus on why, for example, the weak force is 10²⁴ times stronger than gravity.

Why is the Higgs boson so much lighter than it should be? One would expect that the large quantum contributions to the square of the Higgs boson mass would inevitably make the mass huge, comparable to the scale at which new physics appears, unless there is some incredible fine-tuning.

It should be remarked that the problem cannot even be formulated in the strict context of the Standard Model, for the Higgs mass cannot be calculated. In a sense, the problem amounts to the worry that a future theory of fundamental particles, in which the Higgs boson mass will be calculable, should not have excessive fine-tunings.

One proposed solution, popular amongst many physicists, is that one may solve the hierarchy problem via supersymmetry. Supersymmetry can explain how a tiny Higgs mass can be protected from quantum corrections. Supersymmetry removes the power-law divergences of the radiative corrections to the Higgs mass and solves the hierarchy problem as long as the supersymmetric particles are light enough to satisfy certain criteria. This still leaves some other problems including the fact that the LHC has not found any evidence for supersymmetry so far.

https://en.wikipedia.org/wiki/Peter_Higgs

Peter Ware Higgs CH FRS FRSE FInstP (born 29 May 1929) is a British theoretical physicist, Emeritus Professor in the University of Edinburgh, and Nobel Prize laureate for his work on the mass of subatomic particles.

5) Can we unify the strong and electroweak forces?

https://en.wikipedia.org/wiki/Grand_Unified_Theory

A Grand Unified Theory (GUT) is a model in particle physics in which, at high energies, the three-gauge interactions of the Standard Model comprising the electromagnetic, weak, and strong forces are merged into a single force. Although this unified force has not been directly observed, the many GUT models theorize its existence. If unification of these three interactions is possible, it raises the possibility that there was a grand unification epoch in the very early universe in which these three fundamental interactions were not yet distinct.

Experiments have confirmed that at high energy the electromagnetic interaction and weak interaction unify into a single electroweak interaction. GUT models predict that at even higher energy, the strong interaction and the electroweak interaction will unify into a single electronuclear interaction. This interaction is characterized by one larger gauge symmetry and thus several force carriers, but one unified coupling constant. Unifying gravity with the electronuclear interaction would provide a more comprehensive theory of everything (TOE) rather than a Grand Unified Theory. Thus, GUTs are often seen as an intermediate step towards a TOE.

So far, there is no generally accepted GUT model.

6) How can we include gravity?

At the moment the standard model doesn’t include gravity. When physicists are talking about high energy physics, they are talking about 3 fundamental forces. The force we are most familiar with is gravity, and it is missing. There is no theory yet that links gravity with a particle exchange.

https://en.wikipedia.org/wiki/Physics_beyond_the_Standard_Model

Physics beyond the Standard Model (BSM) refers to the theoretical developments needed to explain the deficiencies of the Standard Model, such as the strong CP problem, neutrino oscillations, matter–antimatter asymmetry, and the nature of dark matter and dark energy.

The standard model does not explain gravity. The approach of simply adding a graviton to the Standard Model does not recreate what is observed experimentally without other modifications, as yet undiscovered, to the Standard Model. Moreover, the Standard Model is widely considered to be incompatible with the most successful theory of gravity to date, general relativity.

A theory of quantum gravitation, or more formally quantum geometrodynamics (QGD), does not yet exist. Incorporating gravity into particle physics looks to be a horrendous challenge.

Gravity is weak enough to ignore in most situations that particle physicists think about, but under certain circumstances (near black holes or in the early universe, for example) it becomes very important to have a quantum theory of gravity to help us understand how gravitation fits in with the other forces.

So, at the moment we can’t include gravity into the standard model.

Dark matter

We don’t yet know what dark matter and dark energy are but we do know they make up most of the Universe.
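The notes quote two different figures for dark matter: 27% of the Universe and 85% of the matter in it. The sketch below shows that the two are consistent. It assumes ordinary matter makes up roughly 5% of the Universe, a commonly quoted approximate value that was not given in the lecture notes; the variable names are my own.

```python
# Share of the total energy content of the Universe.
DARK_MATTER = 0.27      # figure quoted in these notes
ORDINARY_MATTER = 0.05  # assumption: approximate value, not from the notes

# Whatever is left over is attributed to dark energy.
DARK_ENERGY = 1.0 - DARK_MATTER - ORDINARY_MATTER

# Dark matter as a share of all matter (dark plus ordinary).
dark_fraction_of_matter = DARK_MATTER / (DARK_MATTER + ORDINARY_MATTER)

print(f"dark energy share of the Universe: {DARK_ENERGY:.0%}")
print(f"dark matter share of all matter:   {dark_fraction_of_matter:.0%}")
```

Under that assumption dark matter is about 84% of all matter, which rounds to the 85% quoted in the research interests section.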

https://www.researchgate.net/figure/Current-Energy-Composition-of-the-Universe_fig3_280792843

Dark matter is not any of the 12 fermions. It is something relatively new and physicists are trying to understand what it is.

https://en.wikipedia.org/wiki/Galaxy_rotation_curve

The rotation curve of a disc galaxy (also called a velocity curve) is a plot of the orbital speeds of visible stars or gas in that galaxy versus their radial distance from that galaxy’s centre. It is typically rendered graphically as a plot, and the data observed from each side of a spiral galaxy are generally asymmetric, so that data from each side are averaged to create the curve. A significant discrepancy exists between the experimental curves observed, and a curve derived by applying gravity theory to the matter observed in a galaxy. Theories involving dark matter are the main postulated solutions to account for the variance.

Galaxy rotation should be proportional to the mass inside the galaxy. It would be expected that the mass of all the stars, planets, gases etc. that we can see would give a peak rotation speed, which would then fall away with distance.

But when these stars are observed moving around, their speed does not fall away with distance; it just seems to increase.

The only explanation for this increase without the galaxy being pulled apart is that there must be more mass than we can see. It is called dark matter because we can’t see it in the normal way, through a telescope. The only way we can be aware of it is because of its gravitational effect on the visible matter around it.
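The argument above can be illustrated with the Newtonian circular-orbit speed, v = sqrt(G M / r), where M is the mass enclosed within radius r. The sketch below is a toy model rather than a fit to any real galaxy: the visible mass, the halo profile (enclosed mass growing in proportion to radius) and the names `VISIBLE_MASS` and `halo_mass_per_kpc` are all illustrative assumptions.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
SOLAR_MASS = 1.989e30  # kg
KPC = 3.086e19         # one kiloparsec in metres

# Hypothetical toy galaxy: all visible mass lies well inside 5 kpc.
VISIBLE_MASS = 1e11 * SOLAR_MASS


def circular_speed(enclosed_mass_kg, radius_m):
    """Speed (m/s) of a circular orbit around the mass enclosed within radius_m."""
    return math.sqrt(G * enclosed_mass_kg / radius_m)


def visible_only_speed(radius_kpc):
    """Orbital speed if only the visible mass were present."""
    return circular_speed(VISIBLE_MASS, radius_kpc * KPC)


def with_halo_speed(radius_kpc, halo_mass_per_kpc=2e10 * SOLAR_MASS):
    """Orbital speed with a toy dark halo whose enclosed mass grows with radius."""
    enclosed = VISIBLE_MASS + halo_mass_per_kpc * radius_kpc
    return circular_speed(enclosed, radius_kpc * KPC)


for r in (5, 10, 20, 40):
    print(f"r = {r:2d} kpc: visible only {visible_only_speed(r) / 1e3:6.0f} km/s, "
          f"with halo {with_halo_speed(r) / 1e3:6.0f} km/s")
```

With visible matter alone the speed halves every time the radius quadruples. Adding unseen mass that keeps growing with radius holds the speed up at large distances, which is the behaviour seen in measured rotation curves.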

Rotation curve of spiral galaxy Messier 33 (yellow and blue points with error bars), and a predicted one from distribution of the visible matter (grey line). The discrepancy between the two curves can be accounted for by adding a dark matter halo surrounding the galaxy.

Galactic rotation curves imply far more mass than expected from normal matter. Greater rotational speeds are due to the presence of dark matter.

Left: A simulated galaxy without dark matter. Right: Galaxy with a flat rotation curve that would be expected under the presence of dark matter.

Another probe that can be used to investigate dark matter is the cosmic microwave background. The measurements suggest we are in a dark Universe.

https://en.wikipedia.org/wiki/Cosmic_microwave_background

The cosmic microwave background (CMB, CMBR), in Big Bang cosmology, is electromagnetic radiation which is a remnant from an early stage of the universe. It’s like an echo from something that happened in the very early Universe.

https://en.wikipedia.org/wiki/Dark_matter#Cosmic_microwave_background

Although both dark matter and ordinary matter are matter, they do not behave in the same way. In particular, in the early universe, ordinary matter was ionized and interacted strongly with radiation via Thomson scattering. Dark matter does not interact directly with radiation, but it does affect the CMB by its gravitational potential (mainly on large scales), and by its effects on the density and velocity of ordinary matter. Ordinary and dark matter perturbations, therefore, evolve differently with time and leave different imprints on the cosmic microwave background (CMB).

Planck 2015 CMB power spectra. The shape is very important. The first peak shows a certain geometry of the Universe.

2015 CMB TT spectrum and best-fit model [Feb 2015]

Old-fashioned CRT TVs could pick up the CMB. It looked like interference.

Examining the CMB allows physicists to investigate the constituents of the Universe.

The standard model offers no suitable dark matter candidate. Dark matter cannot be a charged fermion, a boson (these can decay) or a neutrino (these are too fast and too light).

Dark matter must be stable, otherwise its decay products might be detected. It must also be neutral, heavy and weakly interacting (we can’t detect it with telescopes).

Dark matter can’t involve photons otherwise we could see it.

How can dark matter be created and detected?

Dark matter exists within galaxies, which is why they rotate faster than expected.

Galaxies travel through a dark matter wind.

Some dark matter detectors are found under mountains. The detectors are looking for high energy photons that might have been produced from dark matter collisions, high energy gamma rays.

Dr Potter’s work involves smashing standard model particles together at the LHC to try to make dark matter particles. The experiments would infer that dark matter particles had been produced if there is a momentum/energy imbalance.

The ghostly nature of dark matter presents a real challenge.

The search for dark matter is taking place at the Large Hadron Collider, using the ATLAS and CMS detectors.

Experimentally, three approaches are pursued today to probe the WIMP dark matter particle hypothesis: production at particle accelerators, indirect detection by searching for signals from annihilation products, and direct detection via scattering on target nuclei. The image below shows a schematic representation of the coupling of the standard model and dark matter particles, illustrating possible dark matter detection channels.

Searches for dark matter at colliders require the dark matter particle and its mediator to be within the energetic reach of the collider itself, a limitation not present in direct and indirect searches. By contrast, direct and indirect searches are less sensitive to low mass dark matter and generally are affected by larger experimental uncertainties, due to uncertainties in the knowledge of the distribution of dark matter in the universe.

Schematic showing the possible dark matter detection channels.

All this makes collider searches for dark matter highly complementary and competitive to direct and indirect dark matter detection methods, and the ATLAS and CMS experiments at the Large Hadron Collider (LHC) are in a privileged position to try to provide an answer to the dark matter problem.

https://en.wikipedia.org/wiki/Weakly_interacting_massive_particles

Weakly interacting massive particles (WIMPs) are hypothetical particles that are one of the proposed candidates for dark matter. There exists no clear definition of a WIMP, but broadly, a WIMP is a new elementary particle which interacts via gravity and any other force (or forces), potentially not part of the Standard Model itself, which is as weak as or weaker than the weak nuclear force, but also non-vanishing in its strength.

The experiments are looking for very rare processes

Protons are collided at high energies, around 13 TeV, with the aim of creating dark matter particles whose presence is inferred from energy and momentum conservation. The experiment is repeated billions of times.

The probability of creating dark matter particles in one collision is extremely low which is why billions of collisions are needed.
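Inferring invisible particles from momentum conservation can be sketched in a few lines. Before the collision the protons have essentially no momentum across the beam direction, so the transverse momenta of everything produced should add up to zero. If the visible particles do not balance, something unseen may have carried the rest away. The function name and the two example events below are made up for illustration and are not ATLAS software or data.

```python
import math


def missing_transverse_momentum(visible_particles):
    """Return (magnitude in GeV, azimuthal angle in radians) of the missing momentum.

    visible_particles: iterable of (pt, phi) pairs, where pt is the transverse
    momentum in GeV and phi is the azimuthal angle around the beam in radians.
    """
    sum_px = sum(pt * math.cos(phi) for pt, phi in visible_particles)
    sum_py = sum(pt * math.sin(phi) for pt, phi in visible_particles)
    # The missing momentum is whatever is needed to balance the visible sum.
    miss_px, miss_py = -sum_px, -sum_py
    return math.hypot(miss_px, miss_py), math.atan2(miss_py, miss_px)


# Hypothetical event 1: two back-to-back jets that balance each other.
balanced_event = [(50.0, 0.0), (50.0, math.pi)]

# Hypothetical event 2: a hard jet with only a soft jet recoiling against it.
unbalanced_event = [(120.0, 0.0), (30.0, math.pi)]

for name, event in (("balanced", balanced_event), ("unbalanced", unbalanced_event)):
    magnitude, angle = missing_transverse_momentum(event)
    print(f"{name}: missing transverse momentum = {magnitude:.1f} GeV")
```

A large imbalance on its own is not proof of dark matter, since neutrinos and mismeasured particles also produce one, which is part of why so many collisions have to be analysed.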

Computers generate the paths of the particles predicted to have formed.

https://www.bnl.gov/newsroom/news.php?a=111734

Display of a proton-proton collision event recorded by ATLAS on 3 June 2015, with the first LHC stable beams at a collision energy of 13 TeV. Tracks reconstructed from hits in the inner tracking detector are shown as arcs curving in the solenoidal magnetic field. The green and yellow bars indicate energy deposits in the liquid argon and scintillating-tile calorimeters. Image credit: CERN

The image below shows a candidate Higgs event in the ATLAS detector. Even with the clear signatures and transverse tracks, there is a shower of other particles; this is due to the fact that protons are composite particles. (The ATLAS collaboration / CERN)

Computer generated paths of proton-proton collisions and the resulting particles.

A great deal of effort is required to work out what it means.

The Large Hadron Collider

A hadron is made up of two or more quarks. It is subdivided into baryons and mesons.

A baryon is a type of composite subatomic particle which contains an odd number of valence quarks (at least 3). The most common baryons are the protons and neutrons.

A meson is made up of a quark and an anti-quark.

https://home.cern/science/accelerators/large-hadron-collider

https://en.wikipedia.org/wiki/Large_Hadron_Collider

The Large Hadron Collider (LHC) is the world’s largest and highest-energy particle collider and the largest machine in the world. It was built by the European Organization for Nuclear Research (CERN) between 1998 and 2008 in collaboration with over 10,000 scientists and hundreds of universities and laboratories, as well as more than 100 countries. It lies in a tunnel 27 kilometres in circumference and as deep as 175 metres beneath the France–Switzerland border near Geneva.

It is a historic and successful global collaboration of particle physicists, engineers, accelerator scientists etc.

For proton-proton collisions hydrogen is ionised for the proton beam that circulates around the accelerator complex.

https://www.lhc-closer.es/taking_a_closer_look_at_lhc/0.proton_source

The process is simplified as follows:

For the LHC beam:

2808 bunches × 1.15 × 10¹¹ protons per bunch = 3 × 10¹⁴ protons per beam (6 × 10¹⁴ protons for the two beams)

A single cubic centimetre of hydrogen gas at room temperature, with a pressure of 10⁵ Pa, a volume of 10⁻⁶ m³, absolute temperature of 293 K and using the formula PV = nRT (R is the molar gas constant = 8.3144598 m² kg s⁻² K⁻¹ mol⁻¹) gives the number of moles of hydrogen as 4 × 10⁻⁵.

The number of hydrogen molecules N = n × Avogadro’s number = 4 × 10⁻⁵ × 6 × 10²³ = 2.4 × 10¹⁹ molecules. So, about 5 × 10¹⁹ atoms of hydrogen.

Taking into account the above information, the LHC can be refilled about 100000 times with just one cubic centimetre of gas – and it only needs refilling twice a day!
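The arithmetic above can be reproduced step by step. This is a sketch using the same inputs as the notes (2808 bunches, 1.15 × 10¹¹ protons per bunch, 10⁵ Pa, 1 cm³, 293 K); the variable names are my own, and the value of Avogadro's number is the standard one rather than the rounded 6 × 10²³.

```python
# Beam parameters quoted in the notes.
BUNCHES_PER_BEAM = 2808
PROTONS_PER_BUNCH = 1.15e11

# Physical constants.
GAS_CONSTANT = 8.3144598  # J K^-1 mol^-1
AVOGADRO = 6.022e23       # particles per mole

# One cubic centimetre of hydrogen gas at room temperature.
pressure_pa = 1e5
volume_m3 = 1e-6
temperature_k = 293.0

protons_per_beam = BUNCHES_PER_BEAM * PROTONS_PER_BUNCH
protons_both_beams = 2 * protons_per_beam

# Ideal gas law PV = nRT, rearranged for the number of moles n.
moles_h2 = pressure_pa * volume_m3 / (GAS_CONSTANT * temperature_k)
molecules_h2 = moles_h2 * AVOGADRO
hydrogen_atoms = 2 * molecules_h2  # each H2 molecule supplies two protons

fills = hydrogen_atoms / protons_both_beams

print(f"protons per beam:       {protons_per_beam:.2e}")
print(f"moles of H2 in 1 cm^3:  {moles_h2:.2e}")
print(f"hydrogen atoms:         {hydrogen_atoms:.2e}")
print(f"fills of both beams:    {fills:.2e}")
```

Without rounding the intermediate steps, the number of fills comes out somewhat below 100,000 but of the same order of magnitude, so the conclusion is unchanged.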

The particles are accelerated by a 90 kV supply and leave the Duoplasmatron at 1.4% of the speed of light, i.e. ~ 4000 km/s.

Then they are sent to a radio frequency quadrupole (RFQ), an accelerating component that both speeds up and focuses the particle beam. From the quadrupole, the particles are sent to the linear accelerator (LINAC2).
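The quoted speed can be checked from the accelerating voltage. A proton falling through 90 kV gains 90 keV of kinetic energy, and the relativistic relation between kinetic energy and speed then gives the fraction of the speed of light. The sketch below assumes a proton rest energy of 938.272 MeV, a standard value not given in the notes; the function name is my own.

```python
import math

PROTON_REST_ENERGY_EV = 938.272e6  # assumption: standard value, not from the notes
SPEED_OF_LIGHT_KM_S = 299_792.458


def proton_beta(kinetic_energy_ev):
    """Speed of a proton as a fraction of the speed of light."""
    # Lorentz factor: total energy divided by rest energy.
    gamma = 1.0 + kinetic_energy_ev / PROTON_REST_ENERGY_EV
    return math.sqrt(1.0 - 1.0 / gamma**2)


# A singly charged proton accelerated through 90 kV gains 90 keV.
beta = proton_beta(90e3)
speed_km_s = beta * SPEED_OF_LIGHT_KM_S

print(f"fraction of light speed: {beta:.4f}")
print(f"speed: {speed_km_s:.0f} km/s")
```

The result is close to the 1.4% and roughly 4000 km/s quoted above. At this energy the non-relativistic formula would give almost the same answer, but the relativistic form also works at the much higher energies reached later in the accelerator chain.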

First collisions were achieved in 2010 at an energy of 3.5 teraelectronvolts (TeV) per beam, about four times the previous world record. After upgrades it reached 6.5 TeV per beam (13 TeV total collision energy, the present world record). At the end of 2018, it entered a two-year shutdown period for further upgrades (although this will probably be extended due to the Covid-19 pandemic).

The beams collide at four points around the main 27 km ring.

CMS is looking for new particles and dark matter

https://home.cern/science/experiments/cms

https://en.wikipedia.org/wiki/Compact_Muon_Solenoid

LHCb is looking at matter and anti-matter

https://home.cern/science/experiments/lhcb

https://en.wikipedia.org/wiki/LHCb_experiment

https://www.researchgate.net/figure/The-ATLAS-detector-and-subsystems_fig1_48410331

ATLAS is looking for new particles and dark matter

https://en.wikipedia.org/wiki/ATLAS_experiment

The ATLAS Open Data project uses data from the Large Hadron Collider at CERN which has been made publicly available. You can have a go at looking for things too.

ALICE collides lead ions to investigate quark-gluon plasma

https://home.cern/science/experiments/alice

https://en.wikipedia.org/wiki/ALICE_experiment

The collider has four crossing points, around which are positioned seven detectors, each designed for certain kinds of research. The LHC primarily collides proton beams, but it can also use beams of heavy ions: lead–lead collisions and proton–lead collisions are typically done for one month per year. The aim of the LHC’s detectors is to allow physicists to test the predictions of different theories of particle physics, including measuring the properties of the Higgs boson and searching for the large family of new particles predicted by supersymmetric theories, as well as other unsolved questions of physics.

All the experiments share beam time and data.

So far, the LHC has confirmed the standard model at high energy and discovered the Higgs boson. It is hoping to observe new forces, new particles and new symmetries in nature. It is hoping to produce dark matter.

The 27 km ring relies on 1,232 superconducting dipole magnets to keep the beams on their circular path. They consist of coils made of copper-clad niobium-titanium cables reaching a field strength of 8 T. They are operated at 1.9 K.
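The link between beam energy and dipole field can be sketched with the bending radius of a charged particle in a magnetic field, r = p / (qB). The example below takes the 6.5 TeV beam energy and 8 T field quoted in these notes at face value and treats the proton as ultra-relativistic, so that its momentum in eV/c equals its energy in eV. Pairing those two numbers is my own assumption, as is the function name.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def bending_radius_m(momentum_ev_per_c, field_tesla):
    """Bending radius r = p / (qB) for a particle carrying one unit of charge.

    With p expressed in eV/c, the charge cancels and r = p / (c * B).
    """
    return momentum_ev_per_c / (SPEED_OF_LIGHT * field_tesla)


# Assumption: a 6.5 TeV proton steered by an 8 T dipole field.
radius_m = bending_radius_m(6.5e12, 8.0)
bending_circumference_km = 2 * math.pi * radius_m / 1e3

print(f"bending radius: {radius_m:.0f} m")
print(f"circumference if the dipoles filled the whole ring: {bending_circumference_km:.1f} km")
```

The circle this gives is smaller than the 27 km tunnel, which makes sense because the ring is not a perfect circle of dipoles: it also contains straight sections, the detectors and the other magnets.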

https://en.wikipedia.org/wiki/Superconducting_magnet

A superconducting magnet is an electromagnet made from coils of superconducting wire. They must be cooled to cryogenic temperatures during operation. In its superconducting state the wire has no electrical resistance and therefore can conduct much larger electric currents than ordinary wire, creating intense magnetic fields. Superconducting magnets can produce greater magnetic fields than all but the strongest non-superconducting electromagnets and can be cheaper to operate because no energy is dissipated as heat in the windings.

An additional 392 quadrupole magnets are used to keep the beams focused, with stronger quadrupole magnets close to the intersection points in order to maximize the chances of interaction where the two beams cross. Magnets of higher multipole orders are used to correct smaller imperfections in the field geometry. In total, about 10,000 superconducting magnets are installed, with each dipole magnet having a mass of about 35 tonnes.

Approximately 120 tonnes of superfluid helium-4 is needed to keep the magnets, made of copper-clad niobium-titanium, at their operating temperature of 1.9 K (−271.25 °C), making the LHC the largest cryogenic facility in the world at liquid helium temperature. LHC uses 470 tonnes of Nb–Ti superconductor.

The ATLAS Detector

https://en.wikipedia.org/wiki/ATLAS_experiment

ATLAS (A Toroidal LHC ApparatuS) is the largest, general-purpose particle detector experiment at the Large Hadron Collider (LHC), a particle accelerator at CERN (the European Organization for Nuclear Research) in Switzerland. The experiment is designed to take advantage of the unprecedented energy available at the LHC and observe phenomena that involve highly massive particles which were not observable using earlier lower-energy accelerators. ATLAS was one of the two LHC experiments involved in the discovery of the Higgs boson in July 2012. It was also designed to search for evidence of theories of particle physics beyond the Standard Model.

The experiment is a collaboration involving roughly 3,000 physicists from 183 institutions in 38 countries. The project was led for the first 15 years by Peter Jenni, then by Fabiola Gianotti between 2009 and 2013, and by David Charlton from 2013 to 2017. The ATLAS Collaboration is currently led by Karl Jakobs.

The ATLAS detector is 46 metres long, 25 metres in diameter, and weighs about 7,000 tonnes; it contains some 3,000 km of cable.

ATLAS consists of cathedral-sized detectors

Computer generated cut-away view of the ATLAS detector showing its various components

(1) Muon Detectors

Magnet system:

(2) Toroid Magnets

(3) Solenoid Magnet

Inner Detector:

(4) Transition Radiation Tracker

(5) Semi-Conductor Tracker

(6) Pixel Detector

Calorimeters:

(7) Liquid Argon Calorimeter

(8) Tile Calorimeter

ATLAS has specialised layers for particular particle identification and measurement

https://cds.cern.ch/record/1096081

The data comes out very quickly so computers have to be able to cope.

Sensor technology and fast electronics:

100 million channels × 40 million events per second (40 MHz)

There are challenges in software and computing power. The decision on whether to keep each collision is taken in between 2 ms and 0.7 s. Events are written to disk at a rate of 300 Hz.

200PB+ data storage and distribution
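
To get a feel for how selective this filtering has to be, the two rates quoted above can be compared directly. This is only a back-of-the-envelope sketch: the function name is illustrative, and the real trigger system works in several stages that are not modelled here.

```python
# Rates quoted in the notes.
collision_rate_hz = 40e6   # 40 million events per second
recorded_rate_hz = 300.0   # events written to disk per second

def rejection_factor(input_rate_hz: float, output_rate_hz: float) -> float:
    """How many input events there are for each one that is kept."""
    return input_rate_hz / output_rate_hz

factor = rejection_factor(collision_rate_hz, recorded_rate_hz)
print(f"Roughly 1 in {factor:,.0f} events is kept")
```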

Supersymmetry

Hopefully supersymmetry will provide answers to some open problems, if researchers can find evidence for it.

https://en.wikipedia.org/wiki/Supersymmetry

In particle physics, supersymmetry (SUSY) is a conjectured relationship between two basic classes of elementary particles: bosons, which have an integer-valued spin, and fermions, which have a half-integer spin. A type of spacetime symmetry, supersymmetry is a possible candidate for undiscovered particle physics, and seen by some physicists as an elegant solution to many current problems in particle physics if confirmed correct, which could resolve various areas where current theories are believed to be incomplete. A supersymmetrical extension to the Standard Model could resolve major hierarchy problems within gauge theory, by guaranteeing that quadratic divergences of all orders will cancel out in perturbation theory.

In supersymmetry, each particle from one group would have an associated particle in the other, known as its superpartner, the spin of which differs by a half-integer. These superpartners would be new and undiscovered particles; for example, there would be a particle called a “selectron” (superpartner electron), a bosonic partner of the electron. In the simplest supersymmetry theories, with perfectly “unbroken” supersymmetry, each pair of superpartners would share the same mass and internal quantum numbers besides spin. Since superpartners with the same masses as the known particles would already have been found using present-day equipment, supersymmetry, if it exists, must be a spontaneously broken symmetry, allowing superpartners to differ in mass. Spontaneously broken supersymmetry could solve many problems in particle physics, including the hierarchy problem.

There is no experimental evidence that supersymmetry is correct, or whether or not other extensions to current models might be more accurate. In part, this is because it is only since around 2010 that particle accelerators specifically designed to study physics beyond the Standard Model have become operational (i.e. the LHC), and because it is not yet known where exactly to look, nor the energies required for a successful search.

The main reasons supersymmetry is supported by some physicists are that the current theories are known to be incomplete, that their limitations are well established, and that supersymmetry could be an attractive solution to some of the major concerns.

Charginos and neutralinos are mixtures of Winos, Binos and Higgsinos. If R-parity is conserved, the lightest supersymmetric particle (LSP) is stable.

https://en.wikipedia.org/wiki/R-parity

R-parity is a concept in particle physics. In the Minimal Supersymmetric Standard Model, baryon number and lepton number are no longer conserved by all of the renormalizable couplings in the theory. Since baryon number and lepton number conservation have been tested very precisely, these couplings need to be very small in order not to be in conflict with experimental data.

With R-parity being preserved, the lightest supersymmetric particle (LSP) cannot decay. This lightest particle (if it exists) may therefore account for the observed missing mass of the universe that is generally called dark matter. In order to fit observations, it is assumed that this particle has a mass of 100 GeV/c² to 1 TeV/c², is neutral and only interacts through weak interactions and gravitational interactions. It is often called a weakly interacting massive particle or WIMP.

Typically, the dark matter candidate of the MSSM is an admixture of the electroweak gauginos and Higgsinos and is called a neutralino. In extensions to the MSSM it is possible to have a sneutrino be the dark matter candidate. Another possibility is the gravitino, which only interacts via gravitational interactions and does not require strict R-parity. Note that there are different forms of parity with different effects and principles; R-parity should not be confused with any of the others.

https://en.wikipedia.org/wiki/Minimal_Supersymmetric_Standard_Model

Dark matter creation at the LHC is highly model-dependent

SUSY offers:

An excellent dark matter candidate; A natural solution to the hierarchy problem; Unification of all the forces; A rich field for further searches

The Higgs discovery in 2012 helped to constrain SUSY and SUSY may help to stabilise the Higgs mass!

https://en.wikipedia.org/wiki/Higgsino

In particle physics, a higgsino is the superpartner of the Higgs field.

For “natural SUSY” there is a light higgsino (≲800 GeV), a light stop (<1 TeV) and light gluinos (<1-2 TeV)

Weak-scale SUSY should be seen at the LHC

Supersymmetry at the LHC

Dr Potter is interested in the SUSY versions of bosons

The lightest SUSY particle is a good dark matter candidate. It needs to be neutral, heavy and stable. It is hoped that if found it will be able to answer the question of why the Higgs particle is so light.

https://www.thphys.uni-heidelberg.de/~plehn/index.php?show=prospino

Prospino2 is a computer program which computes next-to-leading order cross sections for the production of supersymmetric particles at hadron colliders. The processes currently included are squark, gluino, stop, neutralino/chargino, and slepton pair production. We have also included the associated production of squarks with gluinos and of neutralinos/charginos with gluinos or squarks and most recently leptoquark pair production. This list is likely to be expanded.

The LHC should have enough energy to create these SUSY particles and there are a lot of new particles to go hunting for.

Squarks should have a large rate of production, if they exist.

They have not been seen yet, either because they are too heavy or because they look too much like standard model particles.

Dr Potter is looking for the hardest ones to spot as they will have the lowest rate of production. She is hoping that her chosen particles will be produced at the LHC.

Supersymmetry is complicated with a lot of parameters.

Researchers are looking at a simplified supersymmetry model and are assuming a particular mass

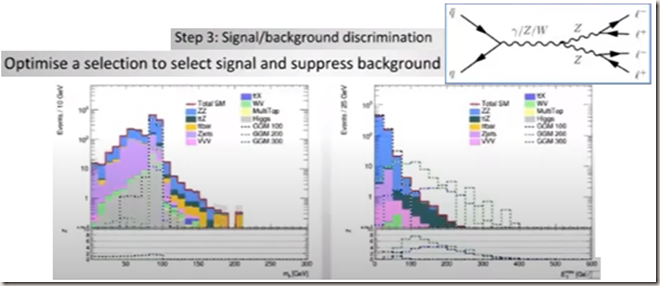

Electroweak SUSY production and decay to multilepton + ETmiss (missing transverse momentum) signatures

Infer the presence of weakly interacting particles in LHC events by looking for missing transverse energy. This may be composed of one or more objects, which may differ.
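
A minimal sketch of the idea: the missing transverse momentum is the negative vector sum of the transverse momenta of everything that was detected, so a large value hints that something invisible escaped. The function name, the (pt, phi) input format and the example event are hypothetical; a real reconstruction uses calibrated detector objects.

```python
import math

def missing_transverse_momentum(visible):
    """visible: iterable of (pt, phi) pairs, pt in GeV and phi in radians.

    Returns (met, met_phi): the magnitude and azimuthal angle of the negative
    vector sum of the visible transverse momenta.
    """
    px = sum(pt * math.cos(phi) for pt, phi in visible)
    py = sum(pt * math.sin(phi) for pt, phi in visible)
    met_x, met_y = -px, -py
    return math.hypot(met_x, met_y), math.atan2(met_y, met_x)

# Hypothetical event: two leptons that do not balance each other.
event = [(60.0, 0.0), (40.0, math.pi / 2)]
met, met_phi = missing_transverse_momentum(event)
print(f"ETmiss = {met:.1f} GeV at phi = {met_phi:.2f} rad")
```

Two back-to-back objects of equal transverse momentum would give a value close to zero, which is what a well-measured event with no escaping particles is expected to look like.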

The project needs to design a broad search using simplified models, with specific decay chains, 100% BR, [masses, σ]

BR is the branching ratio, the fraction of the time a particle decays to a particular final state

The cross section, σ, is a measure of the probability that a given process (here, the production of the SUSY particles) happens in a collision
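
These quantities combine into an expected event count: the cross section (usually written σ) times the integrated luminosity, times the branching ratio, times the fraction of events passing the selection. The sketch below shows the arithmetic only. Every number in it is hypothetical, and the function and parameter names are illustrative.

```python
def expected_events(cross_section_fb, luminosity_fb_inv, branching_ratio=1.0,
                    efficiency=1.0):
    """Expected number of selected signal events: N = sigma * L * BR * efficiency."""
    return cross_section_fb * luminosity_fb_inv * branching_ratio * efficiency

# All numbers below are hypothetical, chosen only to illustrate the arithmetic.
n_signal = expected_events(cross_section_fb=10.0, luminosity_fb_inv=100.0,
                           branching_ratio=1.0, efficiency=0.05)
print(f"Expected signal events: {n_signal:.1f}")
```

Fixing the branching ratio at 100%, as the simplified models do, removes one unknown, so a search result can be read directly as a statement about cross section and mass.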

https://www2.ph.ed.ac.uk/~vjm/Lectures/ParticlePhysics2010_files/Particle3-2Nov.pdf

How to search for SUSY: use signal/background discriminating variables to target specific SUSY events.

Decay can take place through sleptons, or through the known Standard Model W/Z bosons.

Design a very broad search using a simplified model. Build signal regions (SRs) based on requirements on the signal/background discriminating variables to target SUSY events, in the hope that they produce a signal different from the Standard Model expectation. Optimise them for discovery and exclusion.

Pick particular masses of SUSY particles and pick particular decay chains.

For instance, calculate the rate at which the particles should be produced, assume they have a particular mass, assume they are unstable and that any particles produced will decay down to the dark matter candidate, which you have assumed has a certain mass.

The control regions (CRs) are used to normalise the background estimates to data, and the validation regions (VRs) are used to validate (or not) those estimates. Together they provide the background estimation and/or check the modelling of the background.

Researchers could have a clean production of the SUSY particles or they could produce them in addition to other particles which would complicate the final state they are looking for.

The SUSY particles produced could decay promptly or be long-lived. Researchers also have to decide what these particles decay down to.

They could be looking for electrons, muons, taus or they could be looking for quarks, which would look different in the detector.

But one aspect of supersymmetry that researchers should be looking for is missing energy. There should be some imbalance in the particles being produced in collisions and maybe an absence of particles in one particular direction. That would be the smoking gun for a SUSY signature.

All this information needs to be put together for a search strategy.

https://indico.in2p3.fr/event/18346/attachments/51850/67413/Jackson_MarselleSeminar_Feb2019.pdf

ISR = initial-state radiation

VBF = vector boson fusion

The idea of VBF is that, as the protons come into the collision, two parts of the proton (cleverly called “partons,” these can be quarks, antiquarks, or gluons) have a glancing collision. Usually, when a collision happens at the LHC, the partons sort of hit “head-on” and transfer all their energy into whatever is about to be created. In VBF, the two partons come in, then radiate off force-carrying particles called “vector bosons.” Photons are vector bosons, but so are gluons (the force carriers of the strong nuclear force), and the W and Z bosons which carry the weak nuclear force. These two vector bosons then collide with each other (that’s the fusion part of vector boson fusion), and may create some new interesting physics. The incoming partons get slightly deflected by this exchange of the force-carrying bosons, and end up hitting the detector elements at glancing angles.

https://en.wikipedia.org/wiki/Lightest_supersymmetric_particle

In particle physics, the lightest supersymmetric particle (LSP) is the generic name given to the lightest of the additional hypothetical particles found in supersymmetric models.

SUSY search example: 4 leptons

ATLAS-CONF-2020-040 Search for supersymmetry in events with four or more charged leptons in pp collisions with the ATLAS detector

https://twiki.cern.ch/twiki/bin/view/AtlasPublic/CONFnotes

https://en.wikipedia.org/wiki/Minimal_Supersymmetric_Standard_Model

A few practical steps and a simplified model predict 23 new particles

A 120-dimensional parameter space

Charginos and neutralinos will have a small mass; they are grouped together as higgsinos

https://en.wikipedia.org/wiki/Higgsino

In particle physics, for models with N = 1 supersymmetry, a higgsino is the superpartner of the Higgs field.

They should all be quite close in mass.

The decay isn’t going to produce high energy particles and it will be difficult to separate them from the standard model processes.

Dr Potter introduced another particle, the gravitino, which is the superpartner of the graviton. This is a step towards marrying particle physics with gravity.

The gravitino is a brilliant candidate for dark matter.

The idea is to choose a mass for the higgsino and figure out how it decays to a gravitino. Then look for the signal this would leave in the detector.

L = lepton; b = b quark

The yellow cones in the above diagram represent leptons. The pink parts are an indication there is an imbalance of energy/momentum in the event. Something must have escaped. Something that couldn’t be detected. Escaping gravitinos?

Researchers need to be able to distinguish these particles from the standard model background.

Missing transverse energy needs to be identified

https://en.wikipedia.org/wiki/Monte_Carlo_method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results.

In the missing transverse energy plot above, the filled histograms show the Standard Model (SM) backgrounds. The empty ones show the contributions expected from different signal hypotheses, and they should stand out from the Standard Model at high missing energy values.

Analysis design does not just involve seeing where the signal should stand out.

Researchers use data to estimate the background and validate that they are doing the right thing with their background estimation.

They split the background estimation into lots of different backgrounds.

They may take a step away from the signal region with a disjoint selection and measure the rate of one selection relative to another. They then apply that rate to a different control region, which enables them to estimate the background in the signal region.

They could take the ratio from the two control regions (coloured green), apply it to the (blue) control region, and so estimate what they would expect from the Standard Model in the signal region.
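
The ratio idea described above can be sketched as a simple transfer-factor (sometimes called "ABCD") calculation. This is a generic illustration that assumes the two selections are uncorrelated. It is not necessarily the exact procedure used in the ATLAS analysis, and the region names and event counts are hypothetical.

```python
def estimate_signal_region_background(n_cr_a, n_cr_b, n_cr_c):
    """ABCD-style estimate: scale the yield in control region C by the ratio
    measured between control regions A and B.

    N_SR(background) ~ n_cr_c * (n_cr_a / n_cr_b)
    """
    if n_cr_b <= 0:
        raise ValueError("denominator control region must have events")
    return n_cr_c * (n_cr_a / n_cr_b)

# Hypothetical event counts, for illustration only.
predicted = estimate_signal_region_background(n_cr_a=20, n_cr_b=200, n_cr_c=50)
print(f"Predicted background in the signal region: {predicted:.1f} events")
```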

The researchers can use a lot of background estimation methods and make real use of the data from the LHC. Understanding the uncertainty on the estimation is vitally important.

The above image shows the breakdown of the dominant systematic uncertainties in the background estimates for the signal regions. Theoretical uncertainties in the simulation-based estimates, including those on the acceptance of ZZ and ttZ simulations, are grouped under the “Theoretical” category, while the “Normalisation” category includes the statistical uncertainties on data counts in CRZZ and CRttZ and the uncertainty from the fitted normalisation factors. For simplicity, the individual uncertainties are added in quadrature for each category, without accounting for correlations. The total background uncertainty is taken from the background-only fit where correlations of individual uncertainties are accounted for.
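
Adding in quadrature means taking the square root of the sum of the squares, which is only strictly valid when the individual uncertainties are uncorrelated. A minimal sketch with hypothetical values:

```python
import math

def add_in_quadrature(uncertainties):
    """Combine uncertainties as sqrt(sum of squares), ignoring correlations."""
    return math.sqrt(sum(u * u for u in uncertainties))

# Hypothetical relative uncertainties (as fractions) within one category.
experimental = [0.03, 0.04]
print(f"Combined: {add_in_quadrature(experimental):.3f}")
```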

There are lots of different sources of uncertainty. ATLAS has all sorts of detectors and they all have some kind of small uncertainty in measuring the momentum and energy of particles. You can see them as pink and green on the above uncertainty graph, sitting at about 5%. This shows that the ATLAS detector is incredibly precise and gives accurate measurements of the particles. The blue points are uncertainties coming from theory, that is, from the accuracy with which the rates of certain Standard Model background processes can be calculated.

If the researchers have an uncertainty of 10% or 20% in their calculation of the rate of a process then it needs to be fed in as well. This comes out at about 15%.

All this information has to be put together to really understand the background estimation that is being made and to make sure that the methods used for background estimation are working.

Researchers can use some regions of data not being used for the signal region to check that they are modelling things like momentum of leptons or even missing energy successfully.

Above left: The distributions for data and the estimated SM backgrounds in the validation regions after the background-only fit, for the pT (e,μ) in VR0-noZ. “Other” is the sum of the tWZ, ttWW, ttZZ, ttWH, ttHH, tttW, and tttt backgrounds. The last bin includes the overflow. Both the statistical and systematic uncertainties in the SM background are included in the shaded band. Above right: The distributions for data and the estimated SM backgrounds in the validation regions after the background-only fit, for the ETmiss in VRZZ. “Other” is the sum of the tWZ, ttWW, ttZZ, ttWH, ttHH, tttW, and tttt backgrounds. The last bin includes the overflow. Both the statistical and systematic uncertainties in the SM background are included in the shaded band.

Data points are lining up in good agreement with the predictions. That gave Dr Potter confidence to trust the estimation.

Above left: The ETmiss distribution in SR0-ZZloose and SR0-ZZtight for events passing the signal region requirements except the ETmiss requirement. Distributions for data, the estimated SM backgrounds after the background-only fit, and an example SUSY scenario are shown. “Other” is the sum of the tWZ, ttWW, ttZZ, ttWH, ttHH, tttW, and tttt backgrounds. The last bin captures the overflow events. Both the statistical and systematic uncertainties in the SM background are included in the shaded band. The red arrows indicate the ETmiss selections in the signal regions. Above right: Expected and observed yields in the signal regions after the background-only fit. “Other” is the sum of the tWZ, ttWW, ttZZ, ttWH, ttHH, tttW, and tttt backgrounds. Both the statistical and systematic uncertainties in the SM background are included in the uncertainties shown. The significance of any difference between observed and expected yields is shown in the bottom panel, calculated with the profile likelihood method.
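
For intuition only, the significance of an excess in a simple counting experiment can be approximated with a likelihood-ratio formula, Z = sqrt(2 [n ln(n/b) − (n − b)]). This is a simplified stand-in, not the profile likelihood calculation used in the analysis, because it ignores the uncertainty on the background. The function name and example numbers are hypothetical.

```python
import math

def counting_significance(n_observed, b_expected):
    """Approximate significance Z = sqrt(2 * (n * ln(n / b) - (n - b))),
    given a negative sign for a deficit. Ignores background uncertainty."""
    if b_expected <= 0:
        raise ValueError("expected background must be positive")
    if n_observed == 0:
        q = 2.0 * b_expected  # limit of the formula as n -> 0
    else:
        q = 2.0 * (n_observed * math.log(n_observed / b_expected)
                   - (n_observed - b_expected))
    z = math.sqrt(max(q, 0.0))
    return z if n_observed >= b_expected else -z

# Hypothetical yields: 10 events observed where 5 were expected.
print(f"Z = {counting_significance(10, 5.0):.2f}")
```

When the observed yield equals the expectation the function returns zero, which corresponds to data sitting on the background prediction.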

Looking at the signal region researchers have a trustworthy background estimation and they know the uncertainty. What is actually there? What is the data saying?

In the selected regions of high missing energy, all of the data lined up very nicely with the background estimation.

All of the signal regions gave good agreement with the background estimation, so there is no discovery yet. Dr Potter feels she might need more data.

Above left: Diagrams of the processes in the SUSY RPC GGM higgsino models. The W*/Z* produced in the χ₁±/χ₂⁰ decays are off-shell (m ~ 1 GeV) and their decay products are usually not reconstructed. The Higgs boson may decay to leptons and possible additional products via intermediate ττ, WW or ZZ states.

Researchers can go back to the SUSY model, e.g. higgsino production decaying to gravitinos, and they can make some statements about that kind of SUSY.

If that kind of SUSY particle existed at that particular mass and decayed in a particular way, can it, or should it, be excluded?

Should they have been able to see it or, if not, is it still in play?

Dr Potter’s analysis covers higgsino masses up to around 550 GeV, and it can really exclude models in which the higgsinos decay via the Z boson.

If the decay instead goes via the Higgs boson, the sensitivity really drops off, and Dr Potter can’t really say anything with the four-lepton analysis if there is a branching ratio of about 80% to Higgs.

However, another analysis looking for four b-jets in the final state does start to cover this, since each of the two Higgs bosons can decay to a pair of b quarks.

So, these complementary approaches for all sorts of different final states do really say something about a particular SUSY model. Also, there are still some things in play here (the large blue region in the above right image). There is a hole in the sensitivity (white section above the orange peak in the above right image).

Researchers know they need to target this gap with data analysis. They also need to push up to higher masses. These have a lower production rate at the LHC but as more data is taken more of these rarer events should be seen.

No SUSY yet but the LHC is pushing forwards to places that are hidden at the moment. There will be more difficult places for events to be detected but hopefully something will be seen which will allow researchers to characterise it.

Supersymmetry at the LHC

https://cds.cern.ch/record/2683428

Mass reach of the ATLAS searches for Supersymmetry. A representative selection of the available search results is shown. Results are quoted for the nominal cross section in both a region of near-maximal mass reach and a demonstrative alternative scenario, in order to display the range in model space of search sensitivity. Some limits depend on additional assumptions on the mass of the intermediate states, as described in the references provided in the plot. In some cases these additional dependencies are indicated by darker bands showing different model parameters. (ATL-PHYS-PUB-2019-022)

Mass in GeV comes from the famous formula E = mc², where the energy E, in this case, is in GeV, m is the mass and c is the speed of light in a vacuum. Rearranging the equation gives m = E/c². Because c is a constant, particle physicists consider mass to be equivalent to energy and give the mass in GeV (or TeV). Energy in eV is simply the energy in joules divided by the charge of the particle in coulombs (usually 1.6 × 10⁻¹⁹ C, which is the charge on the electron).
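
The conversion can be checked with a few lines of code. This sketch uses the exact SI values of the elementary charge and the speed of light; the function name is illustrative, and 550 GeV is used only because it is the higgsino mass scale mentioned earlier in these notes.

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19   # coulombs (exact SI value)
SPEED_OF_LIGHT_M_S = 299_792_458.0      # metres per second (exact SI value)

def gev_to_kg(mass_gev: float) -> float:
    """Convert a mass in GeV/c^2 to kilograms via m = E / c^2."""
    energy_joules = mass_gev * 1e9 * ELEMENTARY_CHARGE_C
    return energy_joules / SPEED_OF_LIGHT_M_S ** 2

# 550 GeV is the higgsino mass scale mentioned in the notes.
print(f"550 GeV/c^2 = {gev_to_kg(550.0):.3e} kg")
```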

So, the future is?

Discovery mode? → measure new physics!

Search mode? → more statistics/higher energy?

1) The HL-LHC

https://hilumilhc.web.cern.ch/content/hl-lhc-project

https://en.wikipedia.org/wiki/High_Luminosity_Large_Hadron_Collider

The High Luminosity Large Hadron Collider (HL-LHC; formerly SLHC, Super Large Hadron Collider) is an upgrade to the Large Hadron Collider started in June 2018 that will boost the accelerator’s potential for new discoveries in physics, starting in 2027. The upgrade aims at increasing the luminosity of the machine by a factor of 10, up to 10³⁵ cm⁻² s⁻¹, providing a better chance to see rare processes and improving statistically marginal measurements.

https://home.cern/science/accelerators/high-luminosity-lhc

https://home.cern/resources/faqs/high-luminosity-lhc

https://voisins.cern/en/high-luminosity-lhc-hl-lhc

The diagram below shows the location of the work required for the HL-LHC project.

The work at Point 2 (Sergy), Point 6 (Versonnex), Point 7 (Ornex) and Point 8 (Ferney-Voltaire) is exclusively underground and involves the replacement of existing equipment in the LHC. This work will take place mainly during Long Shutdown 2 (2019 – 2020).

The work at Point 4 (Echenevex) involves infrastructure both above and below ground: it will also take place during Long Shutdown 2 and essentially consists of modifications to the cryogenic system.

More significant work will be carried out at Points 1 (Meyrin) and 5 (Cessy), which house the two general-purpose experiments, ATLAS and CMS. Civil-engineering work will be required at these locations to create the space required for the new technical installations needed to increase the luminosity of the LHC.

Hopefully by 2035 HL-LHC will be characterising new physics and/or there will be a much greater chance of seeing rare new physics.

2) Build a set of complementary colliders for discovery and precision. These are either going to push our discoveries and/or give more precise measurements of old and new physics.

(a) One idea is to build a Higgs factory with a linear collider. It is hoped that it will increase the precision of data relating to the Standard Model.

https://en.wikipedia.org/wiki/International_Linear_Collider

The International Linear Collider (ILC) is a proposed linear particle accelerator. It is planned to have a collision energy of 500 GeV initially, with the possibility for a later upgrade to 1000 GeV (1 TeV). Although early proposed locations for the ILC were Japan, Europe (CERN) and the USA (Fermilab), the Kitakami highland in the Iwate prefecture of northern Japan has been the focus of ILC design efforts since 2013. The Japanese government is willing to contribute half of the costs, according to the coordinator of study for detectors at the ILC.

The ILC would collide electrons with positrons. It will be between 30 km and 50 km long, more than 10 times as long as the 50 GeV Stanford Linear Accelerator, the longest existing linear particle accelerator. The proposal is based on previous similar proposals from Europe, the U.S., and Japan.

Studies for an alternative project, the Compact Linear Collider (CLIC) are also underway, which would operate at higher energies (up to 3 TeV) in a machine of length similar to the ILC. These two projects, CLIC and the ILC, have been unified under the Linear Collider Collaboration.

It is widely expected that effects of physics beyond that described in the current Standard Model will be detected by experiments at the proposed ILC. In addition, particles and interactions described by the Standard Model are expected to be discovered and measured. At the ILC physicists hope to be able to:

Measure the mass, spin, and interaction strengths of the Higgs boson

If existing, measure the number, size, and shape of any TeV-scale extra dimensions

Investigate the lightest supersymmetric particles, possible candidates for dark matter

To achieve these goals, new generation particle detectors are necessary.

https://en.wikipedia.org/wiki/Compact_Linear_Collider

The Compact Linear Collider (CLIC) is a concept for a future linear particle accelerator that aims to explore the next energy frontier. CLIC would collide electrons with positrons and is currently the only mature option for a multi-TeV linear collider. The accelerator would be between 11 and 50 km long, more than ten times longer than the existing Stanford Linear Accelerator (SLAC) in California, USA. CLIC is proposed to be built at CERN, across the border between France and Switzerland near Geneva, with first beams starting by the time the Large Hadron Collider (LHC) has finished operations around 2035.

The CLIC accelerator would use a novel two-beam acceleration technique at an acceleration gradient of 100 MV/m, and its staged construction would provide collisions at three centre-of-mass energies up to 3 TeV for optimal physics reach. Research and development (R&D) are being carried out to achieve the high precision physics goals under challenging beam and background conditions.

CLIC aims to discover new physics beyond the Standard Model of particle physics, through precision measurements of Standard Model properties as well as direct detection of new particles. The collider would offer high sensitivity to electroweak states, exceeding the predicted precision of the full LHC programme. The current CLIC design includes the possibility for electron beam polarisation.

The operators could tune the energy of collisions exactly to the Higgs mass, create more Higgs particles and collect lots of precise measurements of them.

CLIC accelerator with energy stages of 380 GeV, 1.5 TeV and 3 TeV

CLIC footprints near CERN, showing various implementation stages.

(b) An alternative to the new linear collider would be to build a new larger circular collider and accelerate particles like electrons, positrons or protons from the LHC into it.

https://home.cern/science/accelerators/future-circular-collider

https://en.wikipedia.org/wiki/Future_Circular_Collider

The Future Circular Collider (FCC) is a proposed post-LHC particle accelerator with an energy significantly above that of previous circular colliders (SPS, Tevatron, LHC). After injection at 3.3 TeV, each beam would have a total energy of 560 MJ. With a centre-of-mass collision energy of 100 TeV (vs 14 TeV at LHC) the total energy value increases to 16.7 GJ. These total energy values exceed the present LHC by nearly a factor of 30.

CERN hosted an FCC study exploring the feasibility of different particle collider scenarios with the aim of significantly increasing the energy and luminosity compared to existing colliders. It aims to complement existing technical designs for linear electron/positron colliders (ILC and CLIC).

The study explores the potential of hadron and lepton circular colliders, performing an analysis of infrastructure and operation concepts and considering the technology research and development programmes that are required to build and operate a future circular collider. A conceptual design report was published in early 2019, in time for the next update of the European Strategy for Particle Physics.

https://www.zmescience.com/science/news-science/new-lhc-plans-432

What the proposed new particle accelerator’s size could look like compared to the LHC. Credit: CERN.

The FCC study developed and evaluated three accelerator concepts for its conceptual design report.

FCC-ee (electron/positron) – collect more precise data for the standard model and act as a Higgs factory

A lepton collider with centre-of-mass collision energies between 90 and 350 GeV is considered a potential intermediate step towards the realisation of the hadron facility. Clean experimental conditions have given e+e− storage rings a strong record both for measuring known particles with the highest precision and for exploring the unknown.

More specifically, high luminosity and improved handling of lepton beams would create the opportunity to measure the properties of the Z, W, Higgs, and top particles, as well as the strong interaction, with increased accuracy.

It can search for new particles coupling to the Higgs and electroweak bosons up to 100 TeV. Moreover, measurements of invisible or exotic decays of the Higgs and Z bosons would offer discovery potential for dark matter or heavy neutrinos with masses below 70 GeV. In effect, the FCC-ee could enable profound investigations of electroweak symmetry breaking and open a broad indirect search for new physics over several orders of magnitude in energy or couplings.

FCC-hh (proton/proton and ion/ion) – will discover new things

A future energy-frontier hadron collider will be able to discover force carriers of new interactions up to masses of around 30 TeV if they exist. The higher collision energy extends the search range for dark matter particles well beyond the TeV region, while supersymmetric partners of quarks and gluons can be searched for at masses up to 15-20 TeV and the search for a possible substructure inside quarks can be extended down to distance scales of 10⁻²¹ m. Due to the higher energy and collision rate, billions of Higgs bosons and trillions of top quarks will be produced, creating new opportunities for the study of rare decays and flavour physics.

A hadron collider will also extend the study of Higgs and gauge boson interactions to energies well above the TeV scale, providing a way to analyse in detail the mechanism underlying the breaking of the electroweak symmetry.

In heavy-ion collisions, the FCC-hh collider allows the exploration of the collective structure of matter at more extreme density and temperature conditions than before.

The proposed colliders will provide new information on the Higgs, probe the substructure of matter or make discoveries of the very heavy mass particles that are being looked for.

Summary

The LHC is mankind’s biggest experiment in history.

It is a large-scale experiment to probe fundamental particles.

It is a collaboration of countries and scientists/engineers whose shared knowledge pushes technology forwards for all to use.

There are high hopes of discovering new physics at the LHC.

Hopefully it will produce an explanation for shadowy dark matter.

Electroweak SUSY particles offer a natural solution to dark matter, but they will be extremely difficult to find.

Researchers will need to dig deeper into the data!

Particle physicists are looking forward and designing the next generation of colliders. They will be doing more “digging”, measuring new physics properties and continuing their quest for the discovery of dark matter and obtaining more precise data for the standard model.

Questions and answers

1) If the collisions between dark matter and the protons only happen once in a blue moon, how can you prove this is a legitimate result and not an anomaly, if it is unlikely to happen again for a long time and you can’t replicate the result? How do we know it’s not a swizz?

You couldn’t announce a discovery from a single observation of what you thought could be your candidate event. You need to see it many times for it to be statistically significant, and it comes down to understanding which other processes could produce a signature like that.

Neutrinos are coming from the Sun all the time. They are very ghostly particles so they could look like the signal you are expecting. It’s understanding how you can discriminate between signal and background.

Unfortunately, some of the background will look like the expected signal. You need to understand the simulations and the theoretically calculated rates as well, and be able to compare the expected background rate with the observations. You need to understand how compatible all the data is, and that is where a lot of the statistical tools come in.

However, it is really hard. None of the experiments are going to be radiation quiet. Although every effort is made to remove radiation from laboratories and clean rooms, it only takes one very rare nuclear decay to spoil things by looking like the expected signal.

Xenon isotopes are used in detectors because their densities make dark matter interaction more likely and they permit a quiet detector through self-shielding. They are also chemically inert.

Some detectors are placed underneath mountains to try and filter cosmic rays.

2) Have you ever been successful and produced any dark matter?

No. Dr Potter isn’t hiding any discoveries.

There is a possibility that it could have been done, but nothing seen so far is statistically significant.

There is a high chance that the background could have fluctuated up to those levels within the data set being looked at.

Statistical tools as well as physical measurements are used to compare one number with another and understand if they are compatible.
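The comparison between an observed event count and the expected background can be illustrated with a simple counting experiment. The sketch below is a minimal example that assumes a perfectly known background and Poisson statistics; real analyses also include systematic uncertainties, and the function names are illustrative only.

```python
import math
from statistics import NormalDist


def poisson_p_value(n_observed: int, expected_background: float) -> float:
    """Probability of seeing n_observed or more events from background alone."""
    # P(N >= n) = 1 - sum_{k=0}^{n-1} exp(-b) * b^k / k!
    term = math.exp(-expected_background)  # the k = 0 term
    cumulative = 0.0
    for k in range(n_observed):
        cumulative += term
        term *= expected_background / (k + 1)  # advance to the k + 1 term
    # Note: for extremely small p-values this subtraction loses precision.
    return max(0.0, 1.0 - cumulative)


def significance_sigma(p_value: float) -> float:
    """Convert a one-sided p-value into an equivalent number of Gaussian sigma."""
    if not 0.0 < p_value < 1.0:
        raise ValueError("p_value must lie strictly between 0 and 1")
    return -NormalDist().inv_cdf(p_value)


if __name__ == "__main__":
    # Hypothetical numbers: 3 background events expected, 5 observed.
    p = poisson_p_value(5, 3.0)
    print(f"p-value = {p:.3f}, significance = {significance_sigma(p):.1f} sigma")
```

With these hypothetical numbers the background alone would give five or more events about 18% of the time, so the excess would not be significant. By convention, particle physicists require about five sigma before claiming a discovery.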

Dr Potter hopes that dark matter particles are being produced, but as they have not been seen, they must be produced at a very low rate or in a region that the current data selections do not yet cover.

Researchers need to look everywhere and take more data.

3) What about gravitons? Have you found any?

No. However, searches for them are ongoing.

If the LHC was creating them you would expect them to decay to smaller standard model particles that are known.

Researchers are looking for a peak in the data, which should stand out quite clearly as an excess of events, as in the Higgs hunt.

Because they haven’t been found yet, experiments are being pushed to higher and higher energies, so the possibility of finding them may lie beyond the current version of the LHC.

Dr Potter would love to “see” them and hopes they can be incorporated with the rest of the fundamental forces.
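A peak search of this kind usually works by reconstructing the invariant mass of the decay products in each event; a new particle would then show up as a bump at its mass. The sketch below shows only the invariant-mass step, in natural units (c = 1) with energies and momenta in GeV. The function name and the example numbers are illustrative assumptions, not taken from any experiment's software.

```python
import math


def invariant_mass(particles):
    """Invariant mass (GeV) of a set of particles given as (E, px, py, pz) in GeV."""
    # Sum the four-momenta of all decay products.
    energy = sum(p[0] for p in particles)
    px = sum(p[1] for p in particles)
    py = sum(p[2] for p in particles)
    pz = sum(p[3] for p in particles)
    # m^2 = E^2 - |p|^2; clamp tiny negative values caused by rounding.
    mass_squared = energy**2 - (px**2 + py**2 + pz**2)
    return math.sqrt(max(mass_squared, 0.0))


if __name__ == "__main__":
    # Hypothetical event: two massless particles emitted back to back.
    decay_products = [
        (62.5, 62.5, 0.0, 0.0),
        (62.5, -62.5, 0.0, 0.0),
    ]
    print(invariant_mass(decay_products))
```

In a real search this quantity would be filled into a histogram over many events and compared with the smoothly falling background expectation.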

4) What kind of tools do you use to fit models to the data? What kind of software do you use?

The software used is usually written by the people carrying out the experiment. It can’t be bought off the shelf or outsourced. It is incredibly particular to the experiments and is usually written in C++ and Python.

The software that governs the reconstruction of the particles from the detector is the very core of the experiment so that everyone is using the same thing. It enables everyone to use the same measurements, calibrations and uncertainties.

It is also important that everyone uses the same software to analyse the selected data. Tools are shared and become standard in the experiment. However, there are low level things that each experimenter writes for themselves.

Dr Potter writes code herself and shares it if it is successful. It can become standardised.

5) I’ve seen theories that dark matter isn’t real and that it is down to our misunderstanding of gravity on large scales. How confident are you that the whole basis of your career is justified?

Dr Potter feels that we must question these assumptions but everything shows that gravity is behaving correctly on small and large scales in the Universe.

Evidence of the correct behaviour of gravity is gravitational lensing.

https://en.wikipedia.org/wiki/Gravitational_lens

A gravitational lens is a distribution of matter (such as a cluster of galaxies) between a distant light source and an observer, that is capable of bending the light from the source as the light travels towards the observer. This effect is known as gravitational lensing, and the amount of bending is one of the predictions of Albert Einstein’s general theory of relativity.

Most of the gravitational lenses in the past have been discovered accidentally.

https://en.wikipedia.org/wiki/Bullet_Cluster

The Bullet Cluster (1E 0657-56) consists of two colliding clusters of galaxies. Strictly speaking, the name Bullet Cluster refers to the smaller subcluster, moving away from the larger one. It is at a comoving radial distance of 1.141 Gpc (3.72 billion light-years).

Gravitational lensing studies of the Bullet Cluster are claimed to provide the best evidence to date for the existence of dark matter.

Observations of other galaxy cluster collisions, such as MACS J0025.4-1222, similarly support the existence of dark matter.

X-ray photo by Chandra X-ray Observatory. Exposure time was 140 hours. The scale is shown in megaparsecs. Redshift (z) = 0.3, meaning its light has wavelengths stretched by a factor of 1.3.

Gravitational lensing enabled the centre of mass of the colliding clusters to be found. It was located far beyond the visible parts of the clusters, because the dark matter didn’t interact as it passed through; it just kept on going. However, the visible matter from both clusters did interact and slowed down.

Everything is telling us at the moment that our interpretation of gravity is correct and there is certainly dark matter out there.

6) What do you think of the Kaluza Klein theory as an explanation for dark matter?

https://en.wikipedia.org/wiki/Kaluza%E2%80%93Klein_theory

In physics, Kaluza–Klein theory (KK theory) is a classical unified field theory of gravitation and electromagnetism built around the idea of a fifth dimension beyond the usual four of space and time and considered an important precursor to string theory.

The idea is that there are additional dimensions within our Universe, but they are wrapped up on a tiny scale, so we don’t experience them.

A way of understanding this is to think of an ant walking along a piece of rope. It can only go back and forth and side-to-side but can’t experience the third dimension.

We live in four dimensions, but some fundamental particles could experience more than four. This leads to an explanation of why gravity is so weak. Gravitons could experience these additional dimensions, and this dilutes the force in our known dimensions compared to the other fundamental forces. The standard model particles could resonate through these extra dimensions and produce large quantities of new particles to look for. So, if we take the Z boson, we could have heavier versions of it, known as Z primes.

https://en.wikipedia.org/wiki/W%E2%80%B2_and_Z%E2%80%B2_bosons

In particle physics, W′ and Z′ bosons (or W-prime and Z-prime bosons) refer to hypothetical gauge bosons that arise from extensions of the electroweak symmetry of the Standard Model. They are named in analogy with the Standard Model W and Z bosons.

Finding Z-primes would be evidence of new physics. Researchers are actively searching for them within ATLAS and other LHC experiments. However, there are competing theories. Until something is found everything is on the table.

7) Is doing the actual experiments harder than coming up with the theory?

Finding the funding, building the laboratories and creating the equipment is, in a sense, incredibly difficult. The LHC took a long time to come to fruition. However, once everything is built and running, the difficulty lessens and the physics can be explored.

Experimenters do need theorists to give accurate predictions of what is expected from the standard model.

So much data is taken that some of the limitations in accuracy get carried across from the calculations.

Uncertainties in rate calculations will become a dominating part of any future collider experiment.

The building and running of the apparatus need to marry up with the theory. They both need to happen correctly. So, if the future circular collider is built but the required theory has not caught up, then there is no point running it.

Pushing the boundaries of the maths as well as pushing the boundaries of physics are equally important.

8) What really is supersymmetry and has it been proven?

There is symmetry in time and space. If you did a physics experiment yesterday and repeated it today (under exactly the same conditions), you would get the same result. The physics has not changed. Symmetries like this are linked to conservation laws, such as the conservation of energy: energy cannot be created or destroyed, so the total quantity must always remain the same.

Supersymmetry is the last possible symmetry of this kind that remains to be investigated.

https://en.wikipedia.org/wiki/Symmetry_(physics)

In physics, a symmetry of a physical system is a physical or mathematical feature of the system (observed or intrinsic) that is preserved or remains unchanged under some transformation.

A type of symmetry known as supersymmetry has been used to try to make theoretical advances in the Standard Model. Supersymmetry is based on the idea that there is another physical symmetry beyond those already developed in the Standard Model, specifically a symmetry between bosons and fermions. Supersymmetry asserts that each type of boson has, as a supersymmetric partner, a fermion, called a superpartner, and vice versa. Supersymmetry has not yet been experimentally verified: no known particle has the correct properties to be a superpartner of any other known particle. Currently LHC is preparing for a run which tests supersymmetry.

Dr Potter talked about particles with spin. Every boson should have a superpartner fermion, and every fermion a superpartner boson. The electron is a fermion, but we haven’t found a boson counterpart of the electron.

The symmetry must therefore be slightly broken. The superpartners must be sitting at a higher mass, which would explain why they haven’t been seen.

https://en.wikipedia.org/wiki/Supersymmetry_breaking

In particle physics, supersymmetry breaking is the process to obtain a seemingly non-supersymmetric physics from a supersymmetric theory which is a necessary step to reconcile supersymmetry with actual experiments. It is an example of spontaneous symmetry breaking.

https://en.wikipedia.org/wiki/Spontaneous_symmetry_breaking

In particle physics the force carrier particles are normally specified by field equations with gauge symmetry; their equations predict that certain measurements will be the same at any point in the field. For instance, field equations might predict that the mass of two quarks is constant. Solving the equations to find the mass of each quark might give two solutions. In one solution, quark A is heavier than quark B. In the second solution, quark B is heavier than quark A by the same amount. The symmetry of the equations is not reflected by the individual solutions, but it is reflected by the range of solutions.